Experts from the POP CoE contributed to the tutorial program of the SC 2021 conference, which was held as a hybrid (in-person and virtual) event.

The tutorial “Hands-on Practical Hybrid Parallel Application Performance Engineering” was presented by Markus Geimer, Marc Schlütter and Brian Wylie (JSC) together with colleagues from TU Dresden and the University of Oregon. They introduced state-of-the-art performance tools for leading-edge HPC systems built on the community-developed Score-P instrumentation and measurement infrastructure, and demonstrated how these tools can be used to engineer the performance of scientific applications based on standard MPI, OpenMP, hybrid combinations of both, and the increasingly common use of accelerators. Parallel performance tools from the Virtual Institute - High Productivity Supercomputing (VI-HPS) were featured in hands-on exercises with Score-P, Scalasca, Vampir and TAU. The presenters covered the entire performance engineering workflow: instrumentation, measurement (profiling and tracing, with timing and PAPI hardware counters), data storage, analysis, tuning and visualization. Emphasis was placed on how the tools can be combined to identify performance problems and investigate optimization alternatives. Participants could carry out the exercises in a containerized E4S [https://e4s.io] environment, provided on AWS, that contained all the necessary tools. This prepared participants to locate and diagnose performance bottlenecks in their own parallel programs.

Christian Terboven (RWTH Aachen) and colleagues from AMD and Oracle presented the tutorial “Advanced OpenMP: Host Performance and 5.1 Features”. OpenMP is a popular, portable, widely supported and easy-to-use shared-memory programming model, and developers usually find it easy to learn. However, they are often disappointed with the performance and scalability of the resulting code, which may stem from the lack of depth with which OpenMP is employed. The tutorial addressed this situation by exploring the implications of possible OpenMP parallelization strategies for both correctness and performance. It focused on performance aspects such as data and thread locality on NUMA architectures and the exploitation of vector units. All topics were accompanied by extensive case studies, and the corresponding language features were discussed in depth. Throughout, recent additions in OpenMP 5.1 and comments on developments targeting OpenMP 6.0 were also presented.
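
As a rough illustration of two of the host-performance topics above (not material taken from the tutorial itself), the following C sketch initializes arrays in parallel with the same loop construct and static schedule as the compute loop. Under a first-touch page placement policy, assumed here as on typical Linux systems, each page then ends up on the NUMA node of the thread that later computes on it, while the `simd` part of the combined construct asks the compiler to use the vector units. The function names `alloc_first_touch` and `daxpy` are hypothetical. Common implementations split both loops into the same contiguous blocks, although the OpenMP specification only guarantees this under stricter conditions (for example, loops in the same parallel region and not combined with `simd`).

```c
#include <stdlib.h>

/* Allocate n doubles and initialize them in parallel. Under a first-touch
 * placement policy (assumed), each page is placed on the NUMA node of the
 * thread that writes it first. */
double *alloc_first_touch(size_t n) {
    double *a = malloc(n * sizeof *a);
    if (a == NULL)
        return NULL;
    /* Same construct and static schedule as in daxpy() below, so that (in
     * common implementations) each thread touches the block it later uses. */
    #pragma omp parallel for simd schedule(static)
    for (size_t i = 0; i < n; i++)
        a[i] = 0.0;
    return a;
}

/* y = alpha * x + y: threads share the iterations in contiguous blocks, and
 * the simd part asks the compiler to vectorize each thread's block. */
void daxpy(size_t n, double alpha, const double *restrict x, double *restrict y) {
    #pragma omp parallel for simd schedule(static)
    for (size_t i = 0; i < n; i++)
        y[i] += alpha * x[i];
}
```

Compile with OpenMP enabled (e.g. `gcc -O2 -fopenmp`); without it the pragmas are ignored and the code runs serially with the same results. Pinning threads, for example via the `OMP_PROC_BIND` and `OMP_PLACES` environment variables, keeps each thread close to the pages it touched first.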

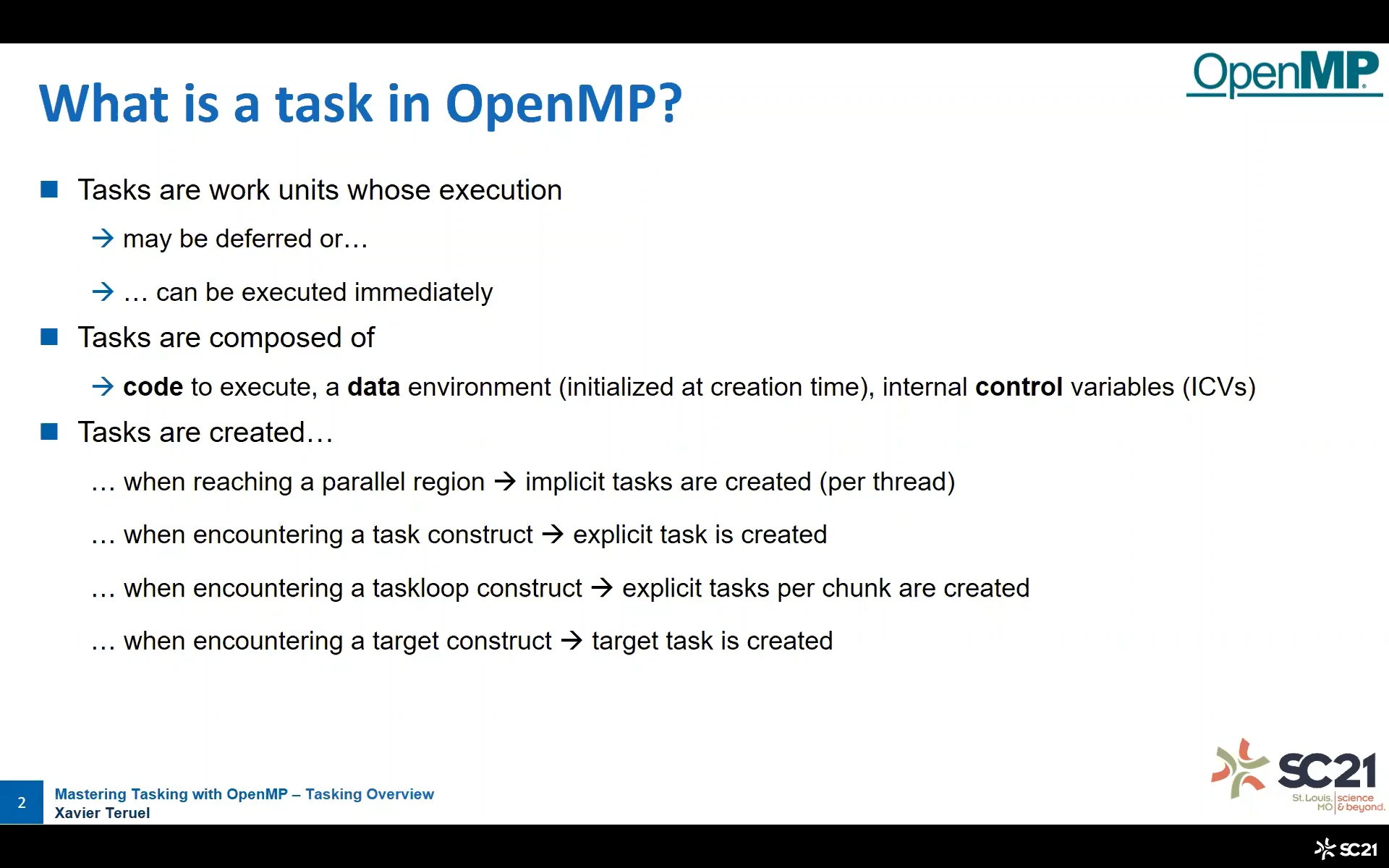

Finally, Xavier Teruel (BSC) and Christian Terboven (RWTH Aachen), together with colleagues from LLNL and AMD, presented “Mastering Tasking with OpenMP”. Since version 3.0, released in 2008, OpenMP has offered tasking to support the creation of composable parallel software blocks and the parallelization of irregular algorithms. Developers usually find OpenMP easy to learn; however, mastering its tasking concept requires a change in the way developers reason about the structure of their code and how they expose its parallelism. The tutorial addressed this critical aspect by examining the tasking concept in detail and presenting patterns as solutions to many common problems. It covered the OpenMP tasking language features in depth and focused on performance aspects such as introducing cut-off mechanisms, exploiting task dependencies and preserving locality. All aspects were accompanied by extensive case studies.
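
The following C sketch gives a rough idea of the tasking features mentioned above; it is not code from the tutorial, and the threshold `CUTOFF` and all function names are hypothetical. The recursive Fibonacci function creates a task per call only above the threshold and falls back to serial recursion below it (a manual cut-off; the `if` and `final` clauses are alternatives), using `taskwait` to join the child tasks. A second function orders two tasks through a `depend` clause instead of an explicit `taskwait`.

```c
/* Hypothetical cut-off threshold: below it, recurse serially to avoid
 * creating many tiny tasks whose overhead exceeds their work. */
#define CUTOFF 20

static long fib_serial(int n) {
    return n < 2 ? n : fib_serial(n - 1) + fib_serial(n - 2);
}

static long fib_task(int n) {
    if (n < CUTOFF)
        return fib_serial(n);          /* manual cut-off */
    long x, y;
    #pragma omp task shared(x)
    x = fib_task(n - 1);
    #pragma omp task shared(y)
    y = fib_task(n - 2);
    #pragma omp taskwait               /* wait for both child tasks */
    return x + y;
}

/* One thread creates the root task; the whole team executes the tasks. */
long fib_parallel(int n) {
    long result = 0;
    #pragma omp parallel
    #pragma omp single
    result = fib_task(n);
    return result;
}

/* The consumer task may only start after the producer task has written v. */
int produce_consume(void) {
    int v = 0, out = 0;
    #pragma omp parallel
    #pragma omp single
    {
        #pragma omp task depend(out: v) shared(v)
        v = 21;
        #pragma omp task depend(in: v) shared(v, out)
        out = 2 * v;
    }   /* implicit barrier at the end of single: both tasks have completed */
    return out;
}
```

With OpenMP enabled (e.g. `gcc -fopenmp`), `fib_parallel(25)` spreads the recursion above the threshold across the team; without it the pragmas are ignored and the same values are computed serially. A suitable threshold depends on the machine and the per-task overhead and would normally be tuned by measurement.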