The emergence of cloud computing has revolutionized hi-tech business, but as technical power and complexity grows, so do the risks - a single misconfiguration can make efficiency plummet and costs soar. Correct NUMA layout is key to efficient application performance on large cloud servers – if you are doing HPC in the cloud you need to know about NUMA.

What is NUMA and why should I worry about it?

High-end cloud use involves compute clusters made up of many nodes that contain more than one CPU and very large quantities of RAM. This provides enormous computational power in a single node but requires an understanding of how the machine manages its CPUs and memory in order to make the most efficient use of the hardware. Each CPU in the node will have a dedicated section of RAM that is faster to access while the remaining RAM will be slower to access. To get best performance the application must place its data in the correct areas of memory to minimize delays when CPUs access data from memory.

Being NUMA-aware

NUMA stands for non-uniform-memory-access, a rather opaque term describing how different areas of memory will be faster or slower to read and write based on their physical locations. Ideally, applications intended to run on high performance and cloud computers should be fully NUMA aware. This requires applications to query the machine for appropriate information about its physical layout and request optimally located resources be allocated when requesting memory and CPU cores. This is clearly nontrivial to implement, however, even if the application is not written in this way there are other ways to control memory allocation to improve performance. Tools such as numactl are available which can set different NUMA profiles for applications depending on their needs to try to ensure resources are allocated in a close to optimal manner. Threading libraries will often also provide some support for managing memory layouts. For example, OpenMP, used for multithread shared memory programs, has mechanisms for the user to describe the NUMA layout of a node and optimize data placement accordingly.

The technical details

In a system with a single CPU hardware layout is normally very straightforward: all the RAM is attached directly to the CPU and can be accessed quickly. However, in the case of a large system with multiple CPUs the RAM is typically divided between the CPUs. This means each CPU can only access some memory directly and must access the rest by communication with the other CPUs via a high-speed interconnecting bus.

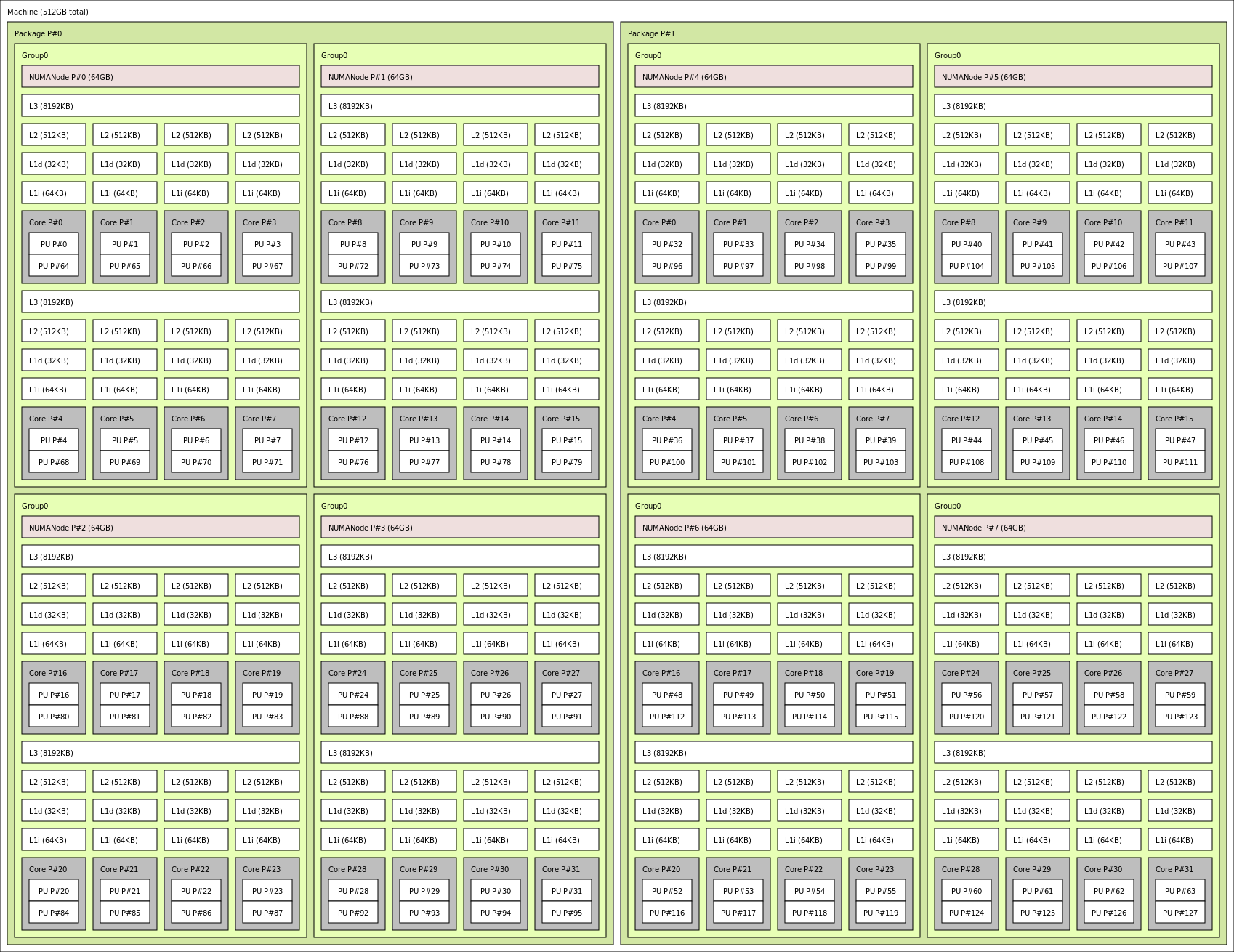

Fig. 1. The hardware layout of a modern 64 core compute node, with 512GB RAM

divided between 8 NUMA nodes each with 8 CPU cores.

The problem with this is that communication through the interconnect has a higher latency than communication with the locally attached RAM, and when the CPU requires data from a portion of RAM attached to another CPU it must wait longer for it to arrive than from local RAM, stalling the ongoing calculation while it waits, and wasting valuable CPU time. As a result, it is preferable to ensure that the data belonging to a process or thread running on a certain CPU has its data stored in the area of RAM directly attached to that CPU.

For example, on a modern dual socket Intel® Xeon® system the delay for indirect memory access via the system bus is 150ns, almost twice that of direct memory accesses at 80 ns1. So, if your application is misconfigured to use an inefficient memory layout you could end up effectively doubling your cloud costs by unnecessary waiting for slow memory!

Find out more

For more information on HPC optimization and efficiency, or to find out how to get free expert assistance with your HPC applications, visit us at https://pop-coe.eu

1 Dual socket Intel® Xeon® Gold 6148, measured using the Intel “MLC” memory latency checker tool